Plotting

Running your quasi-Seldonian algorithm one time and observing that it found a solution is encouraging—certainly more so than if it returned No Solution Found (NSF)! However, it doesn't tell us much about the algorithm's behavior:

- How much performance (mean square error minimization) is lost due to the behavioral constraints?

- How much data does it take for the algorithm to frequently return solutions?

- How often does the algorithm exhibit undesirable behavior?

Performance Loss

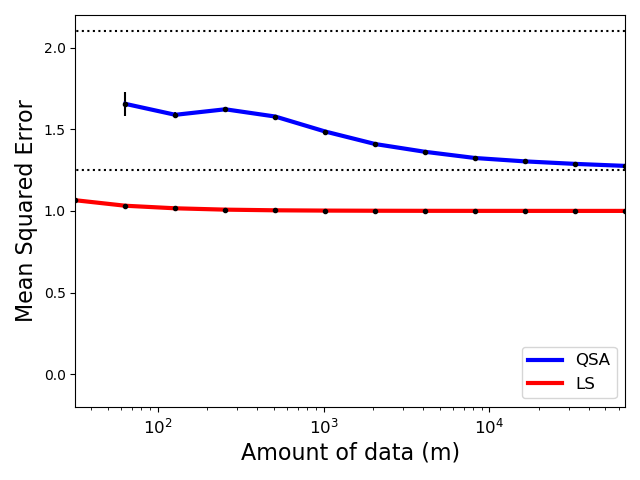

An algorithm that guarantees that it is safe and/or fair will typically have slightly worse performance than an algorithm that focuses purely on optimizing performance. The left column of plots in Figure 3 of our paper shows the slight decrease in predictive accuracy of our (quasi-)Seldonian classification algorithm due to enforcing the behavioral constraints. Similarly, in the supplementary materials of our paper, Fig. S19 shows how ensuring fairness in our GPA regression example results in a slight increase in the mean squared errors of predictions. For the problem we are solving, we will certainly increase the mean squared error, as our second behavioral constraint explicitly requires this. Still, it is worth comparing the mean squared error of the solutions returned by QSA to the mean squared error of the solutions that would be returned by a standard algorithm, in this case least squares linear regression (LS).

Each of the plots below show how different properties of the algorithm vary with the amount of data, \(m\). We first plot performance (MSE) for different amounts of data. Notice that:

- We use a logarithmic scale for the horizontal axis, which means that we run our algorithm with values of \(m\) starting at \(m=32\) and going up to \(65,\!536\), doubling each time. For each value of \(m\), we run \(1000\) trials.

- From each of these trials we store the MSE of the solution returned by

QSA(the quasi-Seldonian algorithm you've written) and byleastSquares(ordinary least squares linear regression).

This raises the question: how should we compute the mean squared errors of estimators (both the estimators produced by our algorithm, and the estimators produced by least squares linear regression)? Typically, we won't have an analytic expression for this. However, for synthetic problems we can usually generate more data. We will evaluate the performance and safety/fairness of our algorithm by generating significantly more data than we used to train the algorithm. Specifically, in our implementation we train with up to 65,536 samples, and use 100 times as many points (around 6,500,000 samples) to evaluate the generated solutions.

The MSE plot that we obtained is below, which includes standard error bars and thin dotted lines to indicate the desired MSE range of \([1.25,2.0]\).

- Notice that QSA doesn't return a solution for small amounts of data, and so its curve does not begin at the far left where \(m=32\).

- Notice that the MSE of the solutions returned by QSA starts closer to the middle of the acceptable range, and then tends down towards the lower boundary. This is because the primary objective function encourages solutions with lower MSE. With more data, QSA can return solutions with MSE closer to \(1.25\), while having the necessary confidence that its MSE remains above \(1.25\).

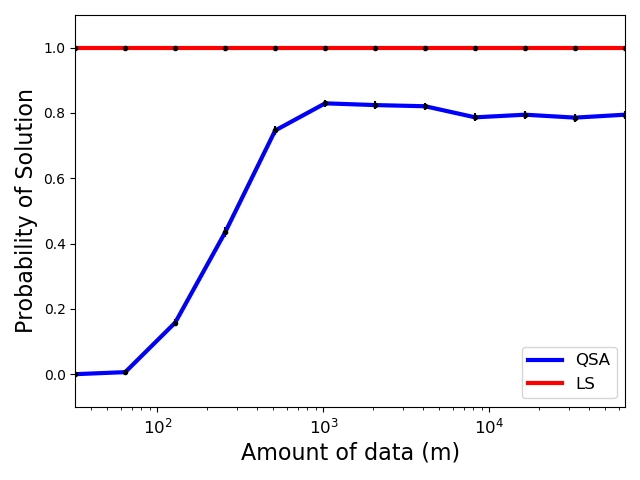

Probability of Solution

The previous plot made it clear that QSA didn't always return a solution. How much data does QSA require to return a solution for this problem? To answer this, we create a plot similar to the one above, but reporting the probability that each method returned a solution.

- Notice that LS always returns a solution, as it does not have the capacity to say "No Solution Found".

- Notice that QSA does not return solutions with little data: this is because it does not have sufficient confidence that any solution would have MSE above 1.25 and below 2.0. As the algorithm obtains more data, the probability that it returns a solution increases and stabilizes around 80%.

- In our upcoming paper at NeurIPS we prove, for a similar algorithm, that if a solution \(\theta\) exists that satisfies \(g_i(\theta) < 0\) for all \(i \in \{1,2,\dotsc,n\}\), then in the limit as the amount of data tends to infinity, the probability that it returns a solution goes to one. In this case, we call that algorithm consistent. It remains an open question whether the QSA algorithm (assuming the candidate selection mechanism finds a global maximizer of the candidate objective) is consistent.

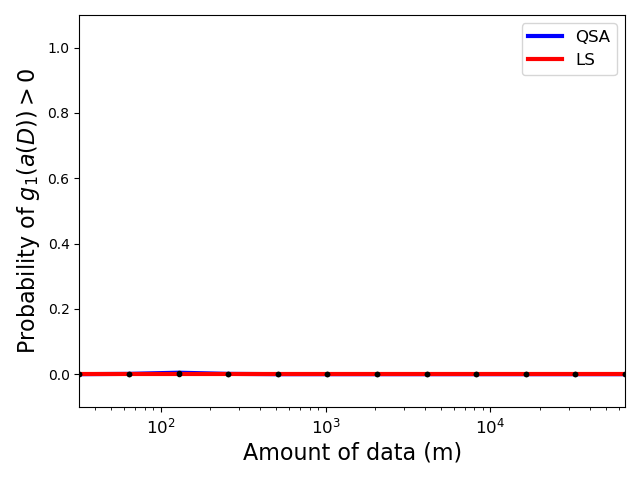

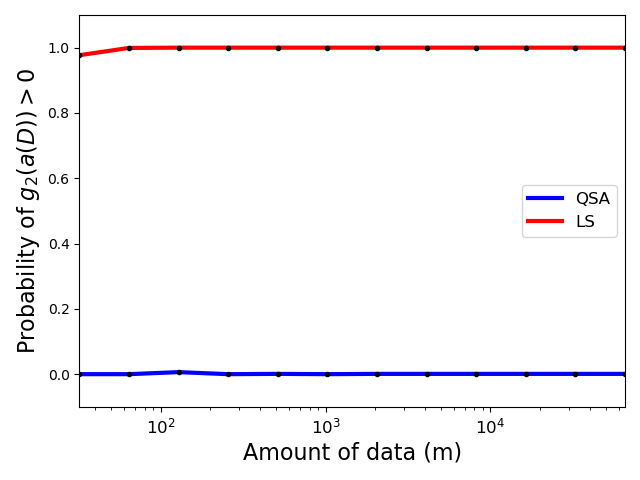

Probability of Undesirable Behavior

Next, we plot the probability that each algorithm produced undesirable behavior: i.e., the probability that each algorithm caused \(g_1(a(D)) > 0\) and the probability that each algorithm caused \(g_2(a(D)) > 0\).

- Recall that \(\delta_1=\delta_2=0.1\), so these probabilities should both be below \(0.1\) for QSA (or at least around \(0.1\), due to our reliance on a normality assumption when using Student's \(t\) statistic).

- Since Least Squares (LS) does not take the behavioral constraints into account, it will frequently produce what we have defined to be undesirable behavior.

- Notice that LS frequently violates the second constraint (figure on the right), producing solutions with MSE below \(1.25\). Of course we shouldn't expect any other behavior of LS, as it was not tasked with ensuring that the MSE is above \(1.25\) and below \(2.0\).

- The curve for

QSAis the one of more interest: if it goes above \(0.1\), the algorithm did not satisfy the behavioral constraints (this could in theory happen here due to our reliance on the aforementioned normality assumption). The curve forQSAis not completely flat at zero: it goes up to a value of \(0.005\), which is well below our threshold of \(0.1\).

Source Code

You can download our source code to create the plots on this page by clicking the download button below:

C++:

This .zip file includes both our updated main.cpp and the outputs we obtained in the directory /output, along with Matlab code to create all of the plots in this tutorial (simply run main.m in Matlab). Other than the line #include<fstream>, all of our changes are below the QSA function. Notice in int main(...) that for two different values of \(m\), say \(m_1\) and \(m_2\) where \(m_1 < m_2\), within the same trial the first \(m_1\) samples in the training data will be the same for both values of \(m\). This use of common random numbers reduces the variance in plots.

Python:

To run this demo, you will need two additional libraries: Ray, to allow our code to run in parallel, and Numba, a Just-in-Time (JIT) compiler that accelerates Python code.

This .zip file includes both the original main.py file, for running our first, simpler experiment, and main_plotting.py, for reproducing the plots and analyses above. The results of each experiment (including output CSV files) are stored in the experiment_results/ folder. To generate the graphs above, please run main_plotting.py and then create_plots.py. All plots are saved in the images/ folder.